Optimizing application performance: Developing well-architected serverless applications with AWS Lambda

A new serverless project that starts out with outdated practices and processes may be set up to fail from the outset, so it calls for a significant shift in mindset. This article discusses the six pillars of the AWS Well-Architected Framework and how you can apply them to build efficient, high-performing, and secure serverless systems. Organizations today need to modernize their methods and applications to deliver digital experiences to large audiences, and serverless is one such approach. Tech executives are rethinking their strategy in order to lower operational costs and overhead and to boost agility. They need to consider how to design serverless applications simply and effectively.

By design, AWS Lambda functions are ephemeral and stateless, and they are the foundation of serverless applications built on AWS. They run on AWS-managed infrastructure, and this architecture can support a wide variety of application workflows. Given these characteristics, it is worth reconsidering how serverless applications are designed in order to reduce latency and improve dependability. It is also worth looking at how to build a durable platform for enforcing security policies and handling failures, all without maintaining any complex hardware. This article introduces best practices that help serverless apps succeed in competitive markets. These practices are aligned with the AWS Well-Architected Framework. Let us get started.

6 Pillars of a Well-Architected Framework

The AWS Well-Architected Framework is a set of principles focusing on six key aspects of any application that significantly impact the business.

1. Sustainability: Addressing the long-term environmental, economic, and societal impact of your business, and guiding businesses to design applications, maximize resource use, and establish sustainability goals.

2. Cost Optimization: Ensuring that running systems deliver business value at the lowest price point.

3. Performance Efficiency: Using computing resources efficiently to power applications and workloads, and maintaining that efficiency as demand changes.

4. Reliability: Ensuring that workloads perform their intended function correctly and consistently when expected.

5. Security: Protecting data, assets, and systems, and making full use of cloud technologies to improve security.

6. Operational Excellence: Supporting development and running workloads effectively, gaining a deeper understanding of operations, and continuously improving supporting processes to deliver business value.

Process of implementing the pillars for serverless applications

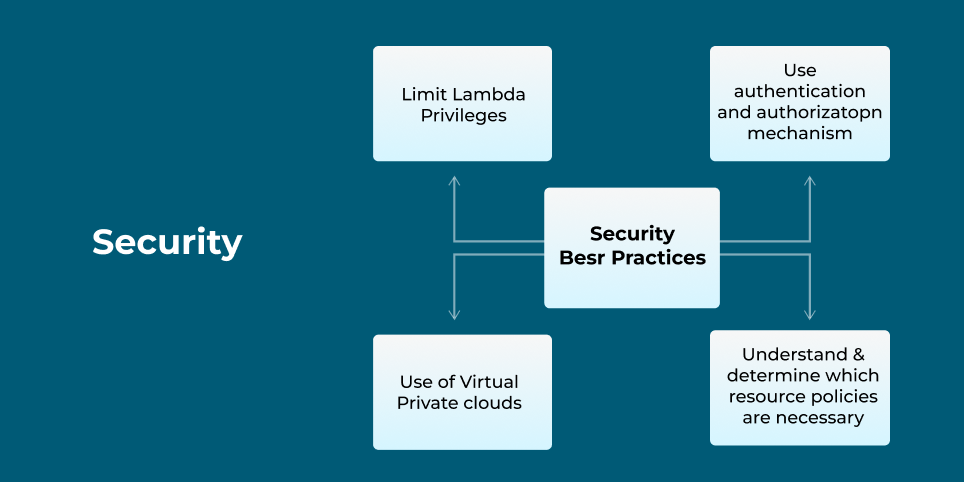

1. Security

Serverless applications are more vulnerable to certain security issues. This is because they are built entirely on FaaS (Function as a Service), and their third-party libraries and components are connected through networks and events. As a result, attackers can damage your application or exploit vulnerable dependencies embedded in it. There are several practices that enterprises can incorporate into their daily routines to address these difficulties and secure their serverless applications from probable threats.

Limiting Lambda privileges

Assume that we have set up our serverless app on AWS and are using AWS Lambda functions to reduce costs and infrastructure management. By applying the principle of least privilege, we can reduce the risk of over-privileged Lambda functions. Under this principle, each function gets an IAM role that grants access only to the resources and services it needs to finish its task.

For instance, a function may need full CRUD (create, read, update, delete) permissions on some database tables, such as those in Amazon RDS, but read-only permissions on others. Policies scoped this way limit authorization to particular actions. Securing serverless apps becomes much simpler when you grant each Lambda function its own role with its own set of permissions.
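The read-only versus CRUD distinction can be sketched as two IAM policy documents built in Python. This is a minimal illustration that assumes DynamoDB tables; the table names, account ID, and ARNs are hypothetical and do not come from the article. Only the DynamoDB action names are real.

```python
import json

# Real DynamoDB IAM action names, grouped by access level.
READ_ACTIONS = ["dynamodb:GetItem", "dynamodb:Query", "dynamodb:Scan"]
WRITE_ACTIONS = ["dynamodb:PutItem", "dynamodb:UpdateItem", "dynamodb:DeleteItem"]


def table_policy(table_arn, read_only):
    """Build an IAM policy document scoped to a single table."""
    actions = READ_ACTIONS if read_only else READ_ACTIONS + WRITE_ACTIONS
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": actions, "Resource": table_arn}
        ],
    }


# Hypothetical ARNs, for illustration only.
ORDERS_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"
CATALOG_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/Catalog"

# One policy per function role: the order writer gets CRUD, the catalog reader gets reads only.
orders_writer_policy = table_policy(ORDERS_ARN, read_only=False)
catalog_reader_policy = table_policy(CATALOG_ARN, read_only=True)

if __name__ == "__main__":
    print(json.dumps(catalog_reader_policy, indent=2))
```

Each document would be attached to the execution role of exactly one function, so a compromised catalog reader could not modify orders.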

Using Virtual Private Clouds

With Amazon Virtual Private Cloud (VPC), users can deploy AWS resources in a custom virtual network. A VPC includes various capabilities to safeguard your serverless application. For instance, you can configure virtual firewalls with security groups to control traffic to and from EC2 instances and relational databases. Using VPCs, you can also significantly decrease the number of loopholes and exploitable entry points that would otherwise expose your serverless applications to security threats.

Understanding and determining the vital resource policies

Users can implement resource-based policies to secure services by limiting access. These policies safeguard service components by specifying which actions are allowed and which roles may perform them. You can apply them based on various identities, such as the source IP address, version, or function event source. These policies are evaluated at the IAM level before the AWS service applies its own authentication.
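As one possible illustration of restricting access by source IP address, the sketch below builds an API Gateway resource policy that allows invocation in general but denies any caller outside an allow list. The CIDR ranges are documentation placeholders and the function name is hypothetical.

```python
def ip_allowlist_policy(allowed_cidrs):
    """Build an API Gateway resource policy that denies callers outside allowed_cidrs."""
    resource = "execute-api:/*/*/*"  # every stage, method, and path of this API
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": resource,
            },
            {
                # An explicit Deny overrides the Allow above for any other source IP.
                "Effect": "Deny",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": resource,
                "Condition": {"NotIpAddress": {"aws:SourceIp": list(allowed_cidrs)}},
            },
        ],
    }


# Placeholder ranges reserved for documentation, not real office networks.
policy = ip_allowlist_policy(["192.0.2.0/24", "198.51.100.0/24"])
```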

Using an authorization and authentication mechanism

Authentication and authorization mechanisms are necessary to manage and regulate access to specific resources. The Well-Architected Framework suggests using them for the APIs of serverless applications. These security measures confirm the identity of a user or client and determine whether they have access to a particular resource. This makes it much simpler to stop unauthorized users from interfering with the service.
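One way to apply this to a serverless API is an API Gateway Lambda authorizer, sketched below for the token-based variant. The token lookup table is a hypothetical stand-in: a real system would verify a signed token (for example a JWT) rather than compare against a hard-coded dictionary.

```python
# Hypothetical token store, for illustration only.
VALID_TOKENS = {"token-alice": "alice"}


def authorizer_handler(event, context=None):
    """Return an IAM policy that allows or denies the incoming API call."""
    token = event.get("authorizationToken", "")
    principal = VALID_TOKENS.get(token)  # authentication: who is calling?
    effect = "Allow" if principal else "Deny"  # authorization: may they call?
    return {
        "principalId": principal or "anonymous",
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": effect,
                    "Resource": event["methodArn"],
                }
            ],
        },
    }
```

API Gateway calls the authorizer before the backend function, so unauthenticated requests never reach the business logic.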

2. Operational Excellence

Investing in operational excellence yields some of the highest returns that users will ever experience.

Using application, business, and operational metrics

Identifying key performance indicators (KPIs), including business, customer, and operations outcomes, is important. With them, you quickly get a higher-level picture of an application's performance. It is equally important to evaluate how your application is performing against the objectives of the business.

For example, consider an e-commerce business owner who wants to track the KPIs linked to customer experience. When you notice fewer transactions in the e-commerce application, these KPIs help highlight how effectively users are using the app. They include perceived latency, the duration of the checkout process, and the ease of selecting a payment method.

Operational indicators enable users to monitor the operational stability of their applications over time. Stability can be tracked with various operational KPIs, such as continuous integration, delivery, feedback time, and resolution time.
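A business KPI such as checkout duration could be recorded from a Lambda function using the CloudWatch embedded metric format (EMF), in which a structured JSON log line is turned into a metric. The sketch below is one way to do it. The namespace, service name, and metric name are hypothetical choices for the e-commerce example.

```python
import json
import time


def emf_record(namespace, service, metric_name, value, unit, timestamp_ms=None):
    """Build a CloudWatch embedded metric format (EMF) log record."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    return {
        "_aws": {
            "Timestamp": timestamp_ms,
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [["Service"]],
                    "Metrics": [{"Name": metric_name, "Unit": unit}],
                }
            ],
        },
        "Service": service,  # dimension value
        metric_name: value,  # metric value
    }


def record_checkout(duration_ms):
    """Log the checkout duration; in Lambda, stdout is delivered to CloudWatch Logs."""
    line = json.dumps(
        emf_record("ECommerce", "checkout", "CheckoutDuration", duration_ms, "Milliseconds")
    )
    print(line)
    return line
```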

Understanding, analyzing, and alerting on metrics that are provided out of the box

You first need to investigate and analyze the behavior of the AWS services that your application uses. AWS services provide a set of common metrics out of the box that help you track the performance of your applications. Because these metrics are generated automatically by the services, you only need to begin tracking them and then add your own custom metrics where needed.

You need to determine which AWS services your application uses. For instance, consider an airline reservation system that uses AWS Lambda, AWS Step Functions, and Amazon DynamoDB. When a customer requests a booking, these AWS services publish metrics to Amazon CloudWatch without affecting the performance of the application.
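To alert on one of these built-in metrics, you could define a CloudWatch alarm on the `Errors` metric that Lambda publishes in the `AWS/Lambda` namespace. The sketch below only builds the alarm parameters. The function name `booking-handler` and the threshold are assumptions for the airline example.

```python
def lambda_error_alarm(function_name, threshold=1, period_seconds=60):
    """Build parameters for a CloudWatch alarm on a function's built-in Errors metric."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No invocations in a period means no data, which should not trigger the alarm.
        "TreatMissingData": "notBreaching",
    }


# Hypothetical function name from the airline reservation example.
alarm_params = lambda_error_alarm("booking-handler")
```

With boto3 installed and credentials configured, these parameters could be passed as `boto3.client("cloudwatch").put_metric_alarm(**alarm_params)`. That call is left out here so the sketch runs without AWS access.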

3. Reliability

Managing failures and high availability

Serverless systems have a noteworthy probability of periodic failures, and the chances are higher when one component depends on another. Even when services or systems do not fail completely, they occasionally suffer partial failures. So, applications have to be designed to withstand these component failures. The application architecture must be capable of detecting faults and self-healing.

For example, transaction failures may happen when one or more components are unavailable or under heavy traffic. AWS Lambda is designed to be fault tolerant and to handle faults. If the service encounters difficulty invoking a particular function, it invokes the function in a different Availability Zone.

Amazon Kinesis data streams and Amazon DynamoDB streams illustrate reliability well. When reading from these two data sources, Lambda makes several attempts to process the entire batch of records. These retries continue until the records expire or reach the maximum age that you define on the event source mapping. With this arrangement, the event source mapping can be configured to split a failed batch into two batches. Retrying the smaller batches isolates corrupt records and works around timeout problems.

When non-atomic actions occur, it is a good idea to evaluate the response and handle partial failures programmatically. For instance, PutRecords for Kinesis and BatchWriteItem for DynamoDB return a successful result if at least one record is ingested successfully.
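The effect of splitting a failed batch can be seen in a small local simulation. This is not how Lambda implements it internally. It is a sketch of the bisecting idea, with a hypothetical `process` function that raises on a corrupt record.

```python
def process_with_bisect(batch, process):
    """Process a batch; on failure, halve it until the bad records are isolated.

    Returns (succeeded_records, poisoned_records).
    """
    try:
        process(batch)
        return list(batch), []
    except Exception:
        if len(batch) == 1:
            return [], list(batch)  # a single failing record is the culprit
        mid = len(batch) // 2
        ok_left, bad_left = process_with_bisect(batch[:mid], process)
        ok_right, bad_right = process_with_bisect(batch[mid:], process)
        return ok_left + ok_right, bad_left + bad_right


def process(batch):
    """Hypothetical processor: the whole batch fails if any record is corrupt."""
    for record in batch:
        if record.get("payload") is None:
            raise ValueError("corrupt record")


batch = [{"id": i, "payload": "ok"} for i in range(5)]
batch[3]["payload"] = None  # simulate one corrupt record
succeeded, poisoned = process_with_bisect(batch, process)
```

Here the four healthy records are processed and only the corrupt one is set aside, instead of the entire batch being retried until it expires.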
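Inspecting such a response might look like the sketch below, which assumes the shape of a Kinesis PutRecords response: a `FailedRecordCount` field and a `Records` list in the same order as the request, where failed entries carry an `ErrorCode`. The sample response is made up for illustration.

```python
def failed_entries(request_records, response):
    """Return the request entries that the service did not ingest."""
    if response.get("FailedRecordCount", 0) == 0:
        return []
    # Response entries are in request order; failed ones carry an ErrorCode.
    return [
        request
        for request, result in zip(request_records, response["Records"])
        if "ErrorCode" in result
    ]


# Made-up request and response, for illustration only.
request_records = [
    {"Data": b"a", "PartitionKey": "k1"},
    {"Data": b"b", "PartitionKey": "k2"},
    {"Data": b"c", "PartitionKey": "k3"},
]
sample_response = {
    "FailedRecordCount": 1,
    "Records": [
        {"SequenceNumber": "1", "ShardId": "shardId-000000000000"},
        {"ErrorCode": "ProvisionedThroughputExceededException", "ErrorMessage": "throttled"},
        {"SequenceNumber": "2", "ShardId": "shardId-000000000000"},
    ],
}

to_retry = failed_entries(request_records, sample_response)
```

The entries in `to_retry` can then be resubmitted, ideally with backoff, rather than treating the overall call as a complete success.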

Throttling

Throttling can be used to prevent APIs from receiving too many requests. For example, Amazon API Gateway throttles requests to your APIs, restricting the number of requests a client can submit within a particular time frame. These limits apply to all clients and are enforced using the token bucket algorithm. API Gateway limits both the steady-state rate and the burst of requests submitted.

A token is taken out of the bucket with every API request. The throttle burst determines the number of concurrent requests. By restricting excessive use of the API, this strategy maintains system performance and minimizes system degradation. For example, consider a large-scale, global system with millions of users that receives a significant volume of API calls each second. Attempting to handle every one of those API calls without limits can result in the system lagging and performing poorly.
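The token bucket algorithm itself is short enough to sketch. The class below is a generic illustration, not API Gateway's implementation: `rate` is the steady-state refill in tokens per second, `burst` is the bucket capacity, and the caller passes in the current time so the behavior is easy to follow.

```python
class TokenBucket:
    """Generic token bucket: refills at `rate` tokens per second up to `burst` tokens."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start with a full bucket
        self.last = now

    def allow(self, now):
        """Take one token if available; return False when the request is throttled."""
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(rate=2, burst=3)
burst_results = [bucket.allow(now=0.0) for _ in range(4)]  # 3 pass, the 4th is throttled
after_refill = bucket.allow(now=0.5)  # half a second at 2 tokens/s refills one token
```

A burst of requests drains the bucket quickly, after which callers are limited to the refill rate.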

4. Performance Efficiency

Reduce cold starts

Cold starts account for less than 0.25% of AWS Lambda requests. However, they have a noteworthy impact on application performance: code execution can occasionally take as long as 5 seconds. They are a bigger concern in large, real-time applications that need to respond within milliseconds.

The AWS Well-Architected best practices for performance advise reducing the number of cold starts. You can do this by considering several factors, such as how quickly your instances start, which depends on the languages and frameworks you use. Languages such as Go and Python typically start more quickly than Java and C#.

You should also aim for fewer functions that still enable functional separation. Lastly, import only the dependencies and libraries necessary for processing the application code. You can import specific service clients instead of the complete AWS SDK. For example, if your code uses only Amazon DynamoDB from the AWS SDK, you can import just the Amazon DynamoDB client.
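A related way to keep initialization light is to defer importing a dependency until the code path that needs it actually runs. The sketch below uses the standard-library `csv` and `io` modules as stand-ins for a heavy dependency. The handler and event fields are hypothetical.

```python
import functools
import importlib
import json


@functools.lru_cache(maxsize=None)
def lazy_module(name):
    """Import a module on first use and cache it for later invocations."""
    return importlib.import_module(name)


def handler(event, context=None):
    rows = event["rows"]
    if event.get("format") == "csv":
        # Only the rarely used CSV path pays the import cost.
        csv = lazy_module("csv")
        io = lazy_module("io")
        buffer = io.StringIO()
        csv.writer(buffer).writerows(rows)
        return buffer.getvalue()
    return json.dumps(rows)
```

The common JSON path never loads the extra modules, and a warm execution environment reuses them once they have been imported.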

Integrate directly with the managed services over functions

Where no custom logic or data transformation is required, prefer native integrations between managed services over Lambda functions. Native integrations help achieve optimal performance with the fewest resources to manage.

Take Amazon API Gateway, for example. With it, you can use the AWS integration type to connect natively to other AWS services. Similarly, when using AWS AppSync, you can use VTL (Velocity Template Language) and direct integrations with Amazon Aurora and Amazon OpenSearch Service.

Serverless architecture has been gaining popularity in the tech industry because it helps reduce complexity and operational cost. Experienced practitioners understand the best practices of serverless application development.

5. Cost Optimization

Required practice: Minimize external calls and function code initialization

Understanding the importance of function initialization is essential, because when a function is called, all its dependencies are imported as well. Every library you include adds to the startup time, so using many libraries will slow down your application. It is therefore important to remove dependencies and minimize external calls wherever feasible, although they cannot always be avoided for operations such as machine learning and other complicated functionality.

You must first identify and then limit the resources your AWS Lambda functions access while running. This can have a direct effect on the value delivered per invocation. So, it is vital to reduce dependence on other managed services and third-party APIs. Functions sometimes rely on application dependencies that may not be suitable for ephemeral environments.

Review code initialization

AWS Lambda reports the time it takes to initialize the application code in Amazon CloudWatch Logs. You should use these metrics to track performance and price, because Lambda functions are billed based on the number of requests made and the amount of time spent. Reviewing the code of your application and its dependencies is important for improving the total execution time.

Moreover, you can make calls to resources outside the Lambda execution environment and reuse the responses in subsequent invocations. In certain cases, it is also advisable to use TTL mechanisms inside your function handler code. This makes it possible to prefetch data that is not stable without making additional external calls that would add to the execution time.
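The initialization time appears as `Init Duration` in the `REPORT` line that Lambda writes to CloudWatch Logs for a cold start. The sketch below parses such a line and estimates the cost of one invocation. The sample line and request ID are made up, and the prices are deliberately left as parameters because they vary by region and architecture.

```python
import re


def parse_report(line):
    """Extract billed duration, memory size, and init duration from a REPORT log line."""
    billed = re.search(r"Billed Duration: (\d+) ms", line)
    memory = re.search(r"Memory Size: (\d+) MB", line)
    init = re.search(r"Init Duration: ([\d.]+) ms", line)  # present on cold starts only
    return {
        "billed_ms": int(billed.group(1)),
        "memory_mb": int(memory.group(1)),
        "init_ms": float(init.group(1)) if init else 0.0,
    }


def invocation_cost(report, price_per_gb_second, price_per_request):
    """Estimate one invocation's cost from billed time, configured memory, and request fee."""
    gb_seconds = (report["memory_mb"] / 1024) * (report["billed_ms"] / 1000)
    return gb_seconds * price_per_gb_second + price_per_request


# Made-up sample line in the REPORT format, for illustration only.
SAMPLE = (
    "REPORT RequestId: 00000000-0000-0000-0000-000000000000 "
    "Duration: 102.25 ms Billed Duration: 103 ms Memory Size: 128 MB "
    "Max Memory Used: 50 MB Init Duration: 150.34 ms"
)
report = parse_report(SAMPLE)
```

Tracking `init_ms` across deployments shows whether a newly added dependency has made cold starts slower.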
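A minimal sketch of this pattern keeps a cached value at module level, outside the handler, and refreshes it only after a TTL has elapsed. The `fetch_config` loader is a hypothetical stand-in for an external call, and the 300-second TTL is an arbitrary choice.

```python
import time


class TTLCache:
    """Cache one loaded value and reload it only after ttl_seconds have passed."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._value = None
        self._expires_at = None  # None means nothing has been loaded yet

    def get(self):
        now = self._clock()
        if self._expires_at is None or now >= self._expires_at:
            self._value = self._loader()  # the only place the external call happens
            self._expires_at = now + self._ttl
        return self._value


def fetch_config():
    """Hypothetical stand-in for an external call (parameter store, HTTP API, ...)."""
    return {"feature_enabled": True}


# Created once per execution environment and reused across warm invocations.
CONFIG_CACHE = TTLCache(fetch_config, ttl_seconds=300)


def handler(event, context=None):
    config = CONFIG_CACHE.get()
    return {"feature_enabled": config["feature_enabled"]}
```

Warm invocations within the TTL skip the external call entirely, which shortens billed duration. The TTL bounds how stale the data can become.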

6. Sustainability

Sustainability is the sixth and most recently introduced pillar of the AWS Well-Architected Framework. Like the other pillars, it contains questions for evaluating your workload. It evaluates the design, architecture, and implementation with the aim of improving efficiency and reducing energy consumption.

AWS customers can reduce the associated energy usage by around 80% compared with a typical on-premises deployment. This is possible because of the capabilities AWS offers its customers: higher server utilization, power and cooling efficiency, custom data center design, and continued efforts to power AWS operations with 100% renewable energy by 2025.

For AWS, sustainability means applying particular design principles when you design your applications on the cloud:

- AWS emphasizes right-sizing every workload to maximize energy efficiency.

- It is vital to understand and measure business outcomes and the related sustainability impact in order to establish performance indicators and evaluate improvements.

- AWS recommends continuously evaluating your hardware and software choices for efficiency, choosing flexible technologies, and designing for flexibility over time.

- AWS recommends setting long-term goals for every workload, modeling ROI, and designing an architecture that reduces the impact per unit of work, for instance per user or per operation, to achieve sustainability at a granular level.

- AWS recommends reducing the energy and resources needed to use your services, and lessening the need for your customers to upgrade their devices.

- AWS recommends using managed, shared services to reduce the infrastructure required to sustain a wider range of workloads.

Wrapping Up

In this blog, my team and I have introduced best practices for following the AWS Well-Architected Framework for serverless applications, with some examples to make the framework easier to follow. But suppose you wish to know whether your existing workloads and applications are set up correctly, or whether they follow best practices after the remediation stage. In that case, it is a great idea to connect with seasoned AWS professionals. Once your application has gone through a Well-Architected review, you will have a step-by-step roadmap that suggests how to optimize operational excellence, performance, costs, and the other aspects your business prioritizes most.